By OZeWAI member Andrew Downie, Accessibility Consultant.

Introduction

Amidst all the discussion and controversy over so-called AI (artificial intelligence) this is a very positive story. We all know (he wrote optimistically) that images should be accompanied by meaningful alternative text. Those of us who use screen readers know that many images lack text descriptions or, sometimes worse, include alt text that serves only to distract and/or mislead. For example, images in articles in one site I visit regularly all have the same alt text, the title of the article.

After that digression, on with the positive. A raft of apps have emerged/evolved recently that can literally provide detailed descriptions of images. This is not a formal review of any of them. Instead, it is a celebration of what is currently possible and musings about even more to come. Some offerings are mainstream products. Others have been specifically intended for use by blind or low vision users of computers and phones, latching on to the same technology used by the big players. The latter will be the main focus of these ramblings.

Some examples

Be My AI

Be My Eyes was the brain child of Hans Jørgen Wiberg, a Danish furniture maker. The initial concept was that a blind person could connect with a volunteer through an app on the phone. Via the phone’s camera, the volunteer provides relevant information. This can range from colour of clothing to readouts on diverse equipment.

In 2023, Be My AI was launched following a thorough beta cycle. The user takes a photo of anything from a document to a landscape and receives a remarkably detailed description. The user can then ask for more information about a particular aspect of the scene.

Seeing AI

For some years Microsoft has made the Seeing AI app freely available on the iPhone. It offers a suite of really helpful tools, the one discussed here being Scene. Like Be My AI, one can take a photo. Alternatively, locate a photo on the phone. Initially a relatively brief description is provided. Tap the More Info button for a more comprehensive description. Explore Photo involves moving a finger around the screen and having items announced, good for understanding relationships.

Screen readers

The JAWS screen reader has included Picture Smart for a while. In the most recent release this has been expanded to harness the power of several AI-based sources. Meanwhile, there is an add-on for NVDA called AI Object Describer. It utilises the GPT4 vision artificial intelligence LLM and involves a small cost.

With these features available within screen readers, the goalposts have been given a huge shove up the field. Descriptions of photos, images on a webpage or in a document can be obtained.

Implications

As those who have applied themselves conscientiously to writing alt text know, it is not always a simple task. The abovementioned tools and others like them can produce a detailed description in a matter of seconds. Keeping in mind that alt text should be meaningful in the context of the situation, an automated tool may not always get the emphasis right. Even then though, it will usually serve as a great starting point. While it may be a little premature to make this suggestion, these tools may redefine the approach to writing alt text. As the technology develops and becomes widely available, a brief description of an image could allow the reader to choose whether to seek out the robot’s description. Or, depending on the nature of the site and/or the enthusiasm of the author, a retrieved description could form the basis of an extended description on the site.

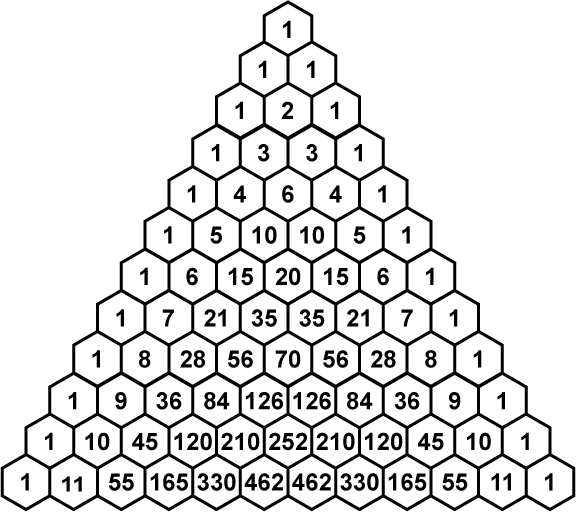

Implications go well beyond detailed descriptions of family photos and picturesque landscape. I have not yet seriously put the robots to the test on charts and graphs. The following result obtained with AI Object Describer on an image of Pascal’s triangle is impressive though.

This image depicts Pascal’s Triangle, a triangular array of numbers. Each number in the triangle is the sum of the two numbers directly above it. The top of the triangle has a single “1.” Below this first layer, each line starts and ends with “1”. As the rows progress, internal numbers grow larger, representing binomial coefficients. The fifth row from the top, for example, reads “1, 5, 10, 10, 5, 1” which corresponds to the coefficients in the expansion of a binomial expression raised to the fourth power. Pascal’s Triangle has applications in algebra, probability, combinatorics, and geometry. The numbers are arranged in a symmetrical pattern, and the image uses a plain, high-contrast color scheme with white numbers on a black background to enhance clarity and readability.

Alt text should not present information that is not available from the image. But in this AI world, it is arguable that the robot can show off its knowledge. As the reader, I can decide which parts to keep.

In a presentation at the 2018 OZeWAI Conference, I showed my best effort at writing alt text for the above image and, without showing the image, asked the audience if it was helpful. I received a resounding “No”. Looking back on my effort (no, I won’t share it here), an edited version of the above description would have been a vast improvement. One aspect of that presentation was to emphasise the importance of physical images. Even the above description has shortcomings compared to a tactile representation which, as of now, is not quite possible online.

Making it personal

I recently received a handwritten greeting card. Placing it on the table, I opened Be My AI and aimed the phone’s camera at it. The response included a description of some surrounding objects, told me the writing was in blue pen and provided an accurate rendition of the text. Similarly, a blind person I know reported that he was able to read all his Christmas cards independently for the first time.

Just one curious aside. I submitted the photo of my greeting card to Copilot. The description was consistent with Be My AI and Seeing AI except for the text. On several attempts, it simply composed its own, completely different, text. Perhaps there is some sort of weird privacy thing going on.

I have been getting a real buzz by going through old photos and getting impressively graphic descriptions. The next photo was taken towards the end of a day’s 600K ride in 40 degree heat. My son and I had just traversed what is laughingly called the Bogang Highway (half is unsealed), which descends from Nimmitabel high in the Snowy Mountains in NSW to Orbost, just over the border in Victoria.

This image features a motorcyclist standing next to a road sign. The motorcyclist is wearing a full-body riding suit and a full-face helmet with a colorful design. The road sign is bright red with reflective yellow panels and indicates a winding road ahead for the next 105 kilometers and advises to reduce speed. The sign is in good condition and prominently placed beside the road, which can be seen trailing off in the background with a visible curve to the left. The environment is natural, with tall trees and some underbrush indicating a rural setting. A motorcycle is parked right next to the person at the edge of the road. It has panniers attached to the sides and a top case, suggesting this might be a long-distance touring or adventure-type bike. This scene seems to capture a moment during a motorcycling adventure, likely in a remote or rural area.

Interestingly, the description varies a little on repeated submissions of the photo. Perhaps a different robot has come on shift.

Where to from here?

In 2014 the K-NFB Reader was released for the iPhone at a stratospheric price of $99US. It provides quite good optical character recognition (OCR). I cannot remember the last time I used it due to inexpensive and free apps that do, in my experience, a better job. And they are now reading handwriting, which was once considered an impossibility. There have been tools around for a decade or so that could identify individual items by referring to a database. But I could not have guessed even a couple of years ago that entire scenes could be described with the level of accuracy and feeling demonstrated above.

There is potential for even more meaningful descriptions of all manner of items. Copilot, for example, already allows the user to accompany an image with a request whose wording indicates the type of information required. The potential exists, then, to ask for an analysis of a chart or graph if the author has not provided one.

As touched on above, the importance of tactile images should not be under estimated. Taking an image intended for visual consumption and converting to a tactile representation requires specific knowledge. What if the robot could do the job? What if I could provide the robot with relevant information and ask for a suitable graphic? “Give me a map for swell paper printing of …”

If possibilities are not endless, they are extensive. I never thought I would appreciate my phone’s camera so much.